I'm Brian, a geek, a tinkerer, and sporadic content creator.

I write blogs and create videos about my hobbies: DIY NAS servers, 3D-printing, home automation, and anything else that captures my attention.

Latest Posts

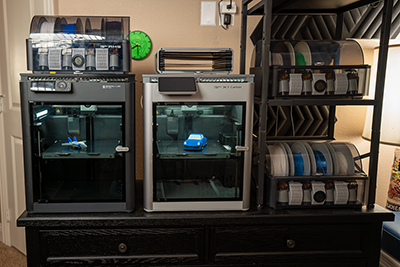

My two-printer arrangement didn't quite work out because the Prusa MK3 couldn't keep up with my Bambu Lab X1C Combo. I solved this problem by buying another Bambu Lab 3D printer!

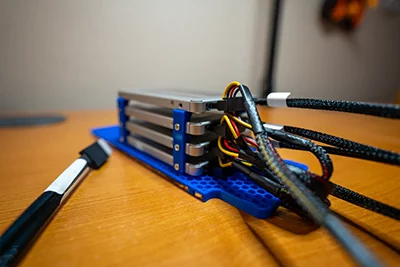

Increasing the capacity of my NAS by designing and 3D-printing a drive caddy for four 2.5-inch SSDs. Is an all-flash DIY NAS in my future?

My evaluation of an interesting barebones NAS featuring two 3.5-inch hard drive bays, an M.2 2280 NVMe slot, an Intel N100 CPU, one DDR4 SODIMM slot, and two 2.5Gbps NICs.

I've been using Octopress 2.0 for over a decade. The time it takes to generate my site, along with declining traffic, made it painfully obvious that a change was overdue.

A small-form-factor, 4-bay DIY NAS featuring a Celeron N5105 CPU, 16GB of RAM, a 128GB NVMe SSD, 2.5Gbps networking, and TrueNAS SCALE for under $400.

I implement an off-site backup using a Mini PC, a 20TB USB HDD, TrueNAS SCALE, Tailscale, and some space at Pat's house.

I got tired of waiting for my 5-tool Prusa XL pre-order to be honored, so I started shopping around for my next printer.

A surprisingly affordable DIY NAS featuring TrueNAS SCALE, an Intel Celeron N5105 CPU, 32GB of DDR4 RAM, 2x 250GB NVMe SSDs, and room for 7 hard drives (5x 3.5-inch and 2x 2.5-inch).